Computers Generally Use Discrete Digital Signals, Which Are Differentiated From Continuous Analog Signals

Difference between Analog and Digital Signal

An electrical or electromagnetic quantity (current, voltage, radio wave, microwave, etc.) that carries data or information from one system (or network) to another is called a signal. Two basic types of signals are used for carrying data: analog signals and digital signals.

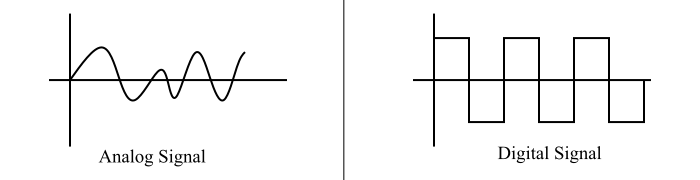

Analog and digital signals are different from each other in many aspects. One major difference between the two signals is that an analog signal is a continuous function of time, whereas a digital signal is a discrete function of time.

This article briefly describes analog and digital signals and then explains the significant differences between them.

What is an Analog Signal?

A signal that is a continuous function of time and is used to carry information is known as an analog signal. An analog signal represents a quantity analogous to another quantity. For example, in an analog audio signal, the instantaneous value of the signal voltage represents the pressure of the sound wave.

Analog signals use a property of the medium to convey information. All naturally occurring signals are analog. However, analog signals are more susceptible to electronic noise and distortion, which can degrade the quality of the signal.

What is a Digital Signal?

A signal that is a discrete function of time, i.e. one that is not continuous, is known as a digital signal. Digital signals are usually represented in binary form and consist of distinct voltage levels at discrete instants of time.

Basically, a digital signal represents data and information as a sequence of separate values, one for each discrete instant. At any given time, the signal can take on only one of a finite number of values.
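
The relationship between the two signal types can be sketched in a few lines of Python. This is only an illustration, not part of the original article: the function names (`analog_signal`, `digitize`), the 1 Hz sine wave, the 8 samples per second and the 3-bit resolution are all assumptions chosen to keep the output small. The sketch samples a continuous function at discrete instants and maps each sample to one of a finite number of levels.

```python
import math

def analog_signal(t, freq_hz=1.0, amplitude=1.0):
    """Idealized analog signal: a value exists for every real-valued time t."""
    return amplitude * math.sin(2 * math.pi * freq_hz * t)

def digitize(signal, duration_s, sample_rate_hz, bits, amplitude=1.0):
    """Sample `signal` at discrete instants and quantize to 2**bits levels."""
    levels = 2 ** bits
    n_samples = int(duration_s * sample_rate_hz)
    codes = []
    for n in range(n_samples):
        t = n / sample_rate_hz                      # discrete sampling instant
        value = signal(t)                           # continuous-valued sample
        # Map [-amplitude, +amplitude] onto the integer codes 0 .. levels-1.
        code = int((value + amplitude) / (2 * amplitude) * levels)
        code = max(0, min(levels - 1, code))        # clamp the top edge
        codes.append(code)
    return codes

# Hypothetical parameters: 1 second of signal, 8 samples/s, 3 bits per sample.
codes = digitize(analog_signal, duration_s=1.0, sample_rate_hz=8, bits=3)
binary_words = [format(c, "03b") for c in codes]
print(codes)
print(binary_words)
```

Each entry in `codes` is an integer from 0 to 7, and `binary_words` shows the same values as 3-bit binary strings. The analog function can be evaluated at any `t`, while the digitized version exists only at the eight sampling instants and only at eight possible levels.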

Difference between Analog and Digital Signal

The following table shows the significant differences between analog signals and digital signals:

| Parameter | Analog Signal | Digital Signal |
|---|---|---|
| Definition | A signal that conveys information as a continuous function of time is known as an analog signal. | A signal that is a discrete function of time, i.e. a non-continuous signal, is known as a digital signal. |
| Typical representation | An analog signal is typically represented by a sine wave, although many other waveforms are possible. | A digital signal is typically represented by a square wave. |
| Signal values | Analog signals use a continuous range of values to represent data and information. | Digital signals use discrete (discontinuous) values, typically 0 and 1, to represent data and information. |
| Signal bandwidth | An analog signal generally needs relatively little bandwidth. | A digital signal generally needs more bandwidth to carry the same information. |
| Suitability | Analog signals are well suited to transmitting audio, video and other information through communication channels. | Digital signals are well suited to computing and digital electronic operations such as data storage. |
| Effect of electronic noise | Analog signals are easily affected by electronic noise. | Digital signals are more stable and less susceptible to noise than analog signals. |
| Accuracy | Because they are more susceptible to noise, analog signals are less accurate. | Digital signals are more accurate because they tolerate a considerable amount of noise before a value is misread. |
| Power consumption | Analog signals generally use more power for data transmission. | Digital signals generally use less power than analog signals to convey the same amount of information. |
| Circuit components | Analog signals are processed by analog circuits, whose major components are resistors, capacitors, inductors, etc. | Digital signals are processed by digital circuits, whose main components are transistors, logic gates, ICs, etc. |
| Observational errors | Analog signals are prone to observational errors. | Digital signals do not give observational errors. |
| Examples | Common examples of analog signals are temperature, current, voltage, voice, pressure, speed, etc. | A common example of a digital signal is the data stored in computer memory. |
| Applications | Analog signals are used in landline phones, thermometers, electric fans, the volume knob of a radio, etc. | Digital signals are used in computers, keyboards, digital watches, smartphones, etc. |
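
The noise and accuracy rows of the table can be illustrated with a small simulation. This is a sketch under stated assumptions, not a model of any real channel: the 0 V / 1 V signalling levels, the 0.5 V decision threshold, the uniform noise bounded at 0.3 V, and the helper names `add_noise` and `regenerate` are all hypothetical. It shows that a receiver can recover digital bits exactly as long as the noise stays below half the spacing between levels, whereas the same noise permanently alters analog sample values.

```python
import random

def add_noise(samples, noise_amplitude, rng):
    """Add bounded uniform noise in [-noise_amplitude, +noise_amplitude]."""
    return [s + rng.uniform(-noise_amplitude, noise_amplitude) for s in samples]

def regenerate(samples, threshold=0.5):
    """Decide each received sample as bit 1 or 0 by comparing to a threshold."""
    return [1 if s > threshold else 0 for s in samples]

rng = random.Random(0)  # fixed seed so the run is repeatable

# Digital case: bits sent as assumed levels of 0.0 V and 1.0 V.
bits = [1, 0, 1, 1, 0, 0, 1, 0]
received = add_noise([float(b) for b in bits], 0.3, rng)
recovered = regenerate(received)
print("bits recovered exactly:", recovered == bits)

# Analog case: the information is the exact value, so noise cannot be undone.
analog = [0.10, 0.35, 0.80, 0.55]
received_analog = add_noise(analog, 0.3, rng)
errors = [abs(a - r) for a, r in zip(analog, received_analog)]
print("analog errors:", [round(e, 3) for e in errors])
```

Because the noise here never exceeds 0.3 V, a transmitted 1 is always received above the 0.5 V threshold and a transmitted 0 always below it, so the bit sequence is recovered without error. If the noise were allowed to exceed 0.5 V, bit errors would become possible, which is why digital signals are better described as noise tolerant than as noise immune.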

Conclusion

Both analog and digital signals are used extensively in computing and communication systems for storing and conveying information. However, there are many differences between the two types of signals, as described in the table above. The most significant difference is that an analog signal is a continuous signal, while a digital signal is a discrete signal.

Updated on 04-Jul-2022 08:31:10


Source: https://www.tutorialspoint.com/difference-between-analog-and-digital-signal
